Remote Simultaneous Interpretation bears risks and opportunities alike. To engage in a debate based on facts rather than conjecture, we are collating audiograms from a wide gamut of settings. Please log onto our questionnaire below and upload your audiograms before/after Sim classes.

HeiCIC, 13.01.2020

Hearing risks from non-ISO, VideoChat-based RSI

Given our profession’s demographic and the indispensability of good hearing for good work, the relevance of a medical study on the matter is obvious.

Combined with the plethora of mistakes that have ruined people’s hearing in the past (see article below), it is no surprise that many colleagues are suffering hearing deterioration faster than the average person does.

In times of Covid-19, many of us have tried not to let our customers down and have worked under dodgy conditions. I will try to elucidate what this is doing to your hearing. The information below was researched for an AIIC Workshop on New Technologies and on bone conduction headsets used to improve psychoacoustic balance, in conjunction with the programmable DSP in Zik Parrot headphones controlled by the hearing aid manufacturer Pyour Audio, used as a DAW:

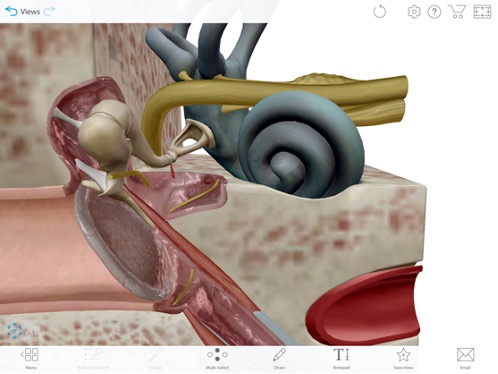

The sound waves entering through the outer ear canal are mechanically softened by the intricate mechanism formed by the tympanic membrane and the ossicles. The movement of the ossicles may be stiffened by two muscles. The stapedius muscle, the smallest skeletal muscle in the body, connects to the stapes and is controlled by the facial nerve; the tensor tympani muscle is attached to the upper end of the medial surface of the handle of the malleus and is under the control of the medial pterygoid nerve, which is a branch of the mandibular nerve of the trigeminal nerve. These muscles contract in response to loud sounds, thereby reducing the transmission of sound to the inner ear. This is called the acoustic reflex. (Reference and Graphics: Human Anatomy Atlas (2021), Argosi Publishing)

When conference interpreters work in the booth, most of us move the right side of our headsets back to free the ear canal so that we can monitor our output. The headset membrane on that side now sends intense low-frequency physical vibrations into your cranium.

Notice how the cochlea (the black snaily bit) is incredibly well protected from sound waves entering the ear canal, yet is cemented into your cranial bone. The cochlea is important because this is where you lose your hearing during web-based RSI.

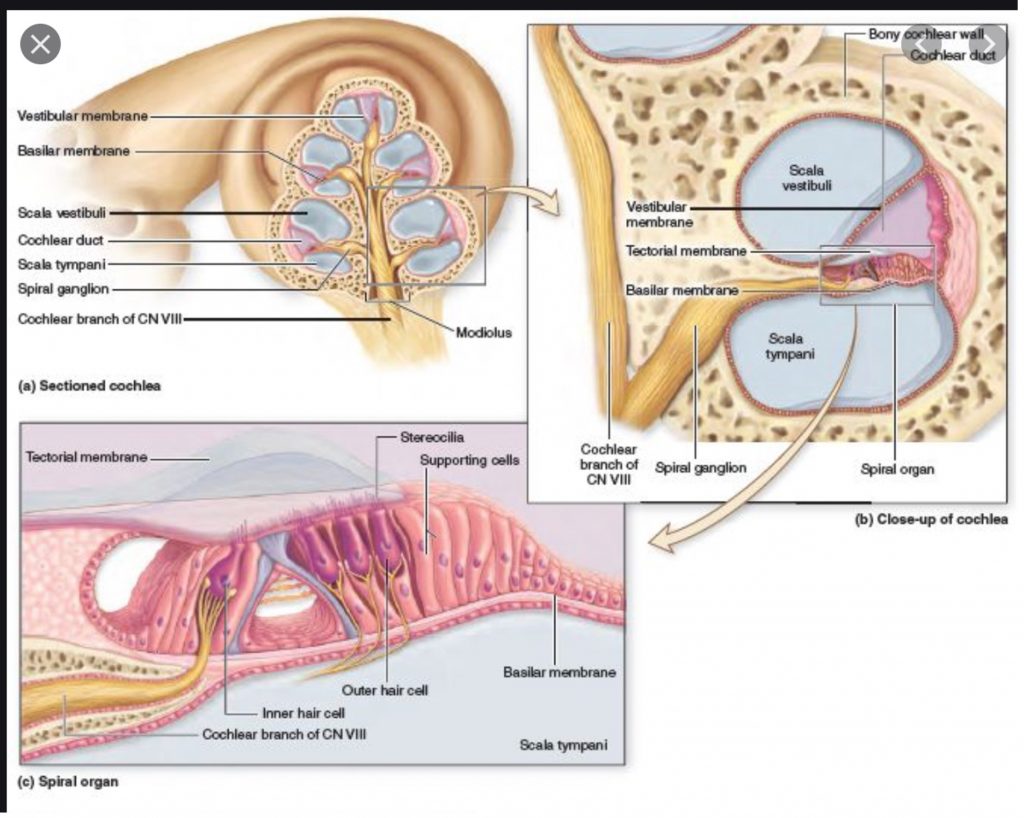

The walls of the hollow cochlea are made of bone, with a thin, delicate lining of epithelial tissue. (…) This continuation at the helicotrema allows fluid being pushed into the vestibular duct by the oval window to move back out via movement in the tympanic duct and deflection of the round window; since the fluid is nearly incompressible and the bony walls are rigid, it is essential for the conserved fluid volume to exit somewhere.

The hair cells are arranged in four rows in the organ of Corti along the entire length of the cochlear coil. Three rows consist of outer hair cells (OHCs) and one row consists of inner hair cells (IHCs). The inner hair cells provide the main neural output of the cochlea. The outer hair cells, instead, mainly receive neural input from the brain, which influences their motility as part of the cochlea’s mechanical pre-amplifier. (…)

The cochlea is filled with a watery liquid, the endolymph, which moves in response to the vibrations coming from the middle ear via the oval window. As the fluid moves, the cochlear partition (basilar membrane and organ of Corti) moves; thousands of hair cells sense the motion via their stereocilia, and convert that motion to electrical signals that are communicated via neurotransmitters to many thousands of nerve cells. These primary auditory neurons transform the signals into electrochemical impulses known as action potentials, which travel along the auditory nerve to structures in the brainstem for further processing. (Reference & Graphic: Wikipedia / Britannica).

So let us summarise what we know. On top of the age-related loss of stereocilia and the professional deterioration of conference interpreters’ hearing, which is exposed to more wear and tear under stressful conditions, we are now asked to do the following:

Work with equipment that does not meet the profession’s technical standards:

Phones and TelcoPrograms such as Cisco Webex and Skype for Business cut the frequency range at between 5.5 kHz and 7.5 kHz instead of 15 kHz. As a result, these systems are typically used at maximum volume, because they were not designed to accommodate the fact that simultaneous interpreters speak at the same time as the speaker. Our voice overlaps occur up to 7.5 kHz, so taking away the range from 7.5 kHz to 15 kHz makes us practically deaf during SI. These systems are not suitable for SI.
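To put the figures above into proportion, here is a minimal sketch that computes how much of a 15 kHz band is missing when the signal is cut at 5.5 kHz or 7.5 kHz. The function name `band_lost` is made up for this illustration, and the calculation says nothing about loudness or intelligibility; it is plain arithmetic on the numbers quoted in the text.

```python
import math

FULL_BAND_HZ = 15_000          # upper limit cited in the text for suitable systems
CUTOFFS_HZ = (5_500, 7_500)    # cut-off range cited for phone/VideoChat audio


def band_lost(cutoff_hz, full_hz=FULL_BAND_HZ):
    """Return (share of the linear band missing, octaves missing) above cutoff_hz."""
    share = (full_hz - cutoff_hz) / full_hz
    octaves = math.log2(full_hz / cutoff_hz)
    return share, octaves


for cutoff in CUTOFFS_HZ:
    share, octaves = band_lost(cutoff)
    print(f"cut at {cutoff} Hz: {share:.0%} of the band missing ({octaves:.2f} octaves)")
```

With a cut at 7.5 kHz, half of the band in hertz, or exactly one octave, is gone; with a cut at 5.5 kHz the missing share is larger still.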

Heidelberg University’s search for systems that could be used for virtual classes, with extensive tests of VideoChat programs such as Zoom, WebEx, Skype, heiCONF (Bluebutton) and many others, came to this conclusion: “These systems are pumping around frequencies at about 5.5-7 kHz and bombard the ear with artefacts.” With an empirical scientific study still pending, we cannot risk using them for our Sim classes.

While your tympanic membrane struggles to hear consonants, the bone conduction through your skull very likely impacts the fragile stereocilia in your cochlea.

You will presumably damage your hearing immediately; however, you may not notice the dramatic deterioration for another 10 years, when age-related deterioration of the stereocilia sets in.

Please do not work in SI from a non-ISO-compliant system that has no limiter and whose sound comes over a phone or VideoChat system.

Update Feb. 2021: Platform companies are adding new features that look promising: in the advanced options, some of the processing that limits the frequency range can be switched off, notably noise cancelling (ANC) and echo cancelling, which have prevented ISO certification during the round tests. We will have a look at the new features, including the automatic transcription/voice-to-text/CCP generation on the speaker side, which looks promising for use with AI-based CAI/augmented interpreting. Voice-to-text systems have never worked satisfactorily when used on the interpreter side of platforms, which seems indicative of the speech intelligibility attained.

References: Round Tests for ISO Compliance: https://aiic.org/document/4862/Report_technical_study_RSI_Systems_2019.pdf

https://aiic.org/document/9506/THC%20Test%20RSI%20platforms%202020.pdf

Recommendations:

https://vkd.bdue.de/fileadmin/verbaende/vkd/Dateien/PDF-Dateien/RSI-Checkliste_VKD_AIIC.pdf

References for audiology and acoustic shock prevention:

https://www.audiologyonline.com/articles/acoustic-shock-injury-real-or-1172

https://www.jabra.com.de/support-page/hearing-protection

https://aiic.org/company/roster/companyRosterDetails.html?companyId=11999&companyRosterId=64

https://www.cdc.gov/niosh/docs/96-110/pdfs/96-110.pdf?id=10.26616/NIOSHPUB96110

Empirical Study Project: Following a study on the prevalence of acoustic shock, our colleagues in Canada have been able to argue for extensive work to retrofit the entire sound chain, including remote delegates’ systems, and make it safe for simultaneous interpretation, i.e. as ISO-compliant as possible with this hybrid system, which includes consoles with limiters.

Now ground-breaking empirical work has been commissioned to gather facts on acoustic fatigue and on cognitive load conflicts and increases when working with RSI platform sound:

https://buyandsell.gc.ca/procurement-data/tender-notice/PW-ZF-521-37794

The Translation Bureau is seeking an empirical research study to explore and investigate fatigue and load during audio and video remote simultaneous interpreting to inform decisions related to service offerings, technical requirements, working conditions, with a view to improving the service quality to clients and Canadians. The research will provide data on fatigue and load during audio- and video remote simultaneous interpreting in order to compare and contrast the effect of input signal modality and input signal quality on the interpreters’ performance and wellbeing. In order to systematically study the parameters identified above, and answer the research question if and what impact signal modality and signal quality have on the interpreters’ performance and wellbeing, it will require a within-subject laboratory experiment and test a cohort of 24 professional conference interpreters in both distance interpreting modalities. More specifically, the study will quantify the impact of three independent variables (modality: ARI, VRI; signal-to-noise-ratio: high, low; and task: shadowing, interpreting), on three dependent variables (quality: accuracy and ratings; load: eye-blinks, pupil dilation, filled pauses; and fatigue: standardized questionnaires).
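The study design described in the notice can be laid out as a small grid. The sketch below enumerates the combinations of the three independent variables and counts how many participant-condition cells result for the cohort of 24. It assumes the design is fully crossed and that every interpreter works in every condition; the notice says "within-subject" but does not spell out the exact schedule.

```python
from itertools import product

# Levels of the three independent variables, as listed in the tender notice.
MODALITY = ("ARI", "VRI")
SIGNAL_TO_NOISE = ("high", "low")
TASK = ("shadowing", "interpreting")
PARTICIPANTS = 24

# Assumption: fully crossed design, i.e. every combination of levels is tested.
conditions = list(product(MODALITY, SIGNAL_TO_NOISE, TASK))

# Assumption: within-subject means each participant works in every condition.
total_cells = PARTICIPANTS * len(conditions)

for modality, snr, task in conditions:
    print(f"{modality} | SNR {snr:<4} | {task}")
print(f"{len(conditions)} conditions, {total_cells} participant-condition cells")
```

Under these assumptions the grid has 2 × 2 × 2 = 8 conditions, so 24 interpreters yield 192 cells, each of which would be scored on the three dependent variables.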

This is one of the methods with which we plan to measure possible hearing deterioration from SI in non-ISO-compliant contexts, found by researcher Sarah Hickey: https://www.nimdzi.com/hearing-loss-and-acoustic-shock-a-silent-threat-for-interpreters/amp/
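For the audiograms we are collecting before and after Sim classes, a first comparison could look like the sketch below. It is only an illustration: the threshold values are invented, the function names are hypothetical, and the 10 dB flag level is an arbitrary placeholder rather than a clinical criterion. Any real evaluation belongs in the hands of an audiologist.

```python
def threshold_shift(before, after):
    """Per-frequency change in hearing threshold in dB HL; positive means worse."""
    common = sorted(set(before) & set(after))
    return {hz: after[hz] - before[hz] for hz in common}


def flagged(shift, flag_db=10):
    """Frequencies whose threshold worsened by at least flag_db (placeholder value)."""
    return [hz for hz, delta in shift.items() if delta >= flag_db]


# Invented example data: frequency in Hz -> threshold in dB HL.
before = {500: 10, 1000: 10, 2000: 15, 4000: 20, 8000: 25}
after = {500: 10, 1000: 15, 2000: 15, 4000: 35, 8000: 30}

shift = threshold_shift(before, after)
print(shift)
print("flagged:", flagged(shift))
```

Only frequencies present in both audiograms are compared, so measurements taken at different test frequencies do not produce misleading gaps.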